The word computer comes from compute, which means to calculate. Before machines took over this work, “computer” was the term for a person whose job was doing calculations, such as accounting. In Burmese, the closest word is ‘clerk’, meaning the person who does the accounting. This is the original meaning of computer.

In modern times, “A computer is an electronic device that can perform arithmetic operations at high speed and is also called a data processor because it can store, process and retrieve data whenever desired.” By this definition, the phones and tablets we use are also computers. When we say computer, we usually mean a PC (personal computer) such as a laptop or desktop. In fact, there are many other computers, large and small. Some are military systems far removed from our daily lives, and others are everyday devices we use without realizing they are computers. So any electronic device that can store, compute, and retrieve data when needed can generally be called a computer.

There is a saying, “Necessity is the mother of invention.” Computers, too, were born of necessity. As human society grew from families to villages, from villages to countries, and from countries to the whole world, responsibilities, information, and business structures grew with it. So did the need to manage vast amounts of information over time, and it was these needs that led to the advent of computers. Various calculating machines for business were developed along the way. These early machines cannot be called computers by the modern definition, because they could only perform basic arithmetic such as addition, subtraction, and multiplication.

During World War II, the need for computers grew rapidly. Machines were needed to encrypt one's own messages so the enemy could not read them, and to decrypt the enemy's messages as quickly as possible. The history of computing, however, reaches back long before World War II. Since computer derives from compute, the first recorded calculating device is often taken to be the abacus, which by some accounts appeared about 7,000 years ago, around 5000 BC. It could only help with arithmetic.

The Analytical Engine was designed by Charles Babbage (1791-1871), a professor at the University of Cambridge. It was conceived as a machine that could store data, compute with it, and retrieve it when needed. He began designing it in 1834, and although it was never completed, it is regarded as the first design for a general-purpose digital computer. Machines that fit the modern definition only appeared around World War II. Between 1936 and the end of the war, many computers were developed in competition with one another, and the history of this period is complicated: one book says one thing and another book says something else. So when you study the history of computers after 1936, do not be discouraged if you cannot find complete information. If you stop at every small conflict between sources, you will not be able to move forward.

Well-known computers from 1936 onward

The Z1, begun by Konrad Zuse in 1936, was an early programmable calculating machine built from mechanical parts rather than relays. The ABC (Atanasoff-Berry Computer) was built by a team led by John Vincent Atanasoff to solve the systems of equations they needed to calculate. Built using vacuum tubes, it is often called the first electronic computer. Atanasoff is said to have conceived it in 1937, and it was built over the following years. Some consider the British government's COLOSSUS, which appeared in 1943, to be the first electronic computer. As a government-backed machine, I think it was more powerful than the ABC.

The Mark I was completed in 1944 under Harvard University professor Howard Aiken and was one of the first general-purpose computers built in the United States. The Mark I was an electromechanical computer, combining electrical and mechanical parts. Another book states that work on it began in 1937, and that it weighed about 5 tons and was about 15 meters long. ENIAC was built by J. Presper Eckert and John Mauchly and unveiled in 1946. ENIAC was the first general-purpose electronic computer and a forerunner of the computers in wide use today. Another book states that ENIAC (Electronic Numerical Integrator And Computer) was built using vacuum tubes and weighed about 30 tons. Dates seem to vary because some sources give the year construction began and others give the year it was completed.

From around 1959, transistors replaced vacuum tubes, and computers changed dramatically. Computers using vacuum tubes are considered the first generation of modern computers, while computers using transistors are considered the second generation. Transistor computers, such as the IBM 1401, LEO III, later UNIVAC models, and Atlas, were more powerful and smaller than vacuum-tube computers.

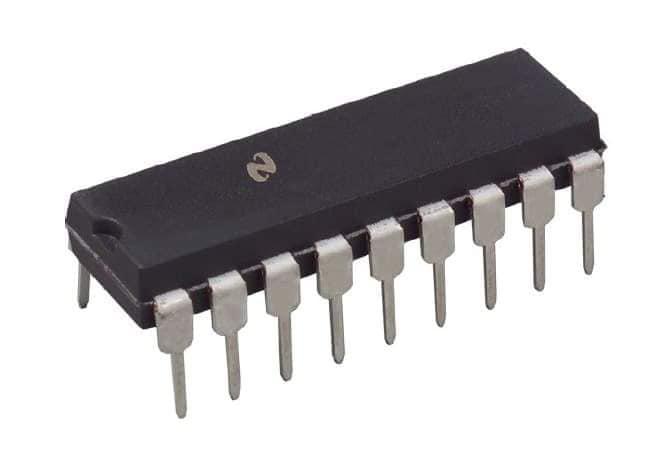

Integrated circuit (IC) technology has been used since the mid-1960s, in computers such as the ICL 1900 series and the IBM System/360 series. Computers built with ICs are considered the third generation. Later, as more and more circuitry could be packed into a single small chip (large-scale integration), microprocessor technology developed. From the 1970s onward, microprocessors such as the Zilog Z80 and the Intel 8086, 8088, and 80286 appeared in microcomputers. These microprocessor computers are considered the fourth generation. The DEC PDP-1, sometimes described as the first microprocessor computer, was actually a transistor-based computer from 1959.

The computers we use today are called the fifth generation. They build on fourth-generation microprocessor computers as engineers keep finding new ways to make them smaller and more capable. It is not yet possible to say exactly when the next generation will arrive, because computers are constantly being developed for the particular fields they serve and steadily improved in performance and quality. The next change of era will come when new discoveries in physics make some other technology more cost-effective than silicon chips, just as transistors replaced vacuum tubes.